5 Shocking AI Security Risks: Insider Threats and Deepfakes Devastating Businesses

When you typed ‘AI security risks insider threats deepfakes’ into Google at 1 a.m., you weren’t hunting for fluff—you needed answers fast. I’ve been there. Your CEO mentioned something about “shadow AI” in the board meeting, or maybe you read another headline about deepfake CEO scams costing millions. Now you’re wondering: what are the real threats, and how do you actually protect your organization?

You’re right to be concerned. AI security risks aren’t some distant sci-fi threat; they’re happening right now, and the latest industry research and government guidance are sobering. But here’s what most articles won’t tell you: understanding these threats isn’t rocket science, and neither is building your defense strategy.

AI Security Risks, Insider Threats, and Deepfakes: The Devastating Reality

The 2025 IBM Cost of a Data Breach Report found that the global average cost of a data breach dropped to USD 4.44 million. Here’s the catch: organizations that suffered cyberattacks because of security issues with “shadow AI” paid an average of $670,000 more per breach than firms with little or no shadow AI. Added to the global average, that premium suggests a shadow AI breach can exceed $5 million per incident.
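As a rough check on that $5 million figure, the arithmetic below adds IBM’s reported shadow AI premium to the global average. This is a back-of-the-envelope sketch, not an IBM statistic. It assumes the premium stacks on top of the global average, whereas IBM measured the premium against firms with little or no shadow AI. The function name is hypothetical.

```python
# Figures reported in IBM's Cost of a Data Breach Report 2025, in USD.
GLOBAL_AVERAGE_BREACH_COST = 4_440_000
SHADOW_AI_PREMIUM = 670_000


def estimated_shadow_ai_breach_cost(
    baseline: int = GLOBAL_AVERAGE_BREACH_COST,
    premium: int = SHADOW_AI_PREMIUM,
) -> int:
    """Back-of-the-envelope estimate: baseline breach cost plus the shadow AI premium.

    Assumption: the premium is additive to whatever baseline you pass in.
    IBM compared against firms with little or no shadow AI, so treat the
    result as an approximation rather than a reported figure.
    """
    return baseline + premium


if __name__ == "__main__":
    cost = estimated_shadow_ai_breach_cost()
    print(f"Estimated shadow AI breach cost: ${cost:,}")  # $5,110,000
```

To see how the premium scales in your own setting, pass your own baseline instead (for example, your industry’s average breach cost).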

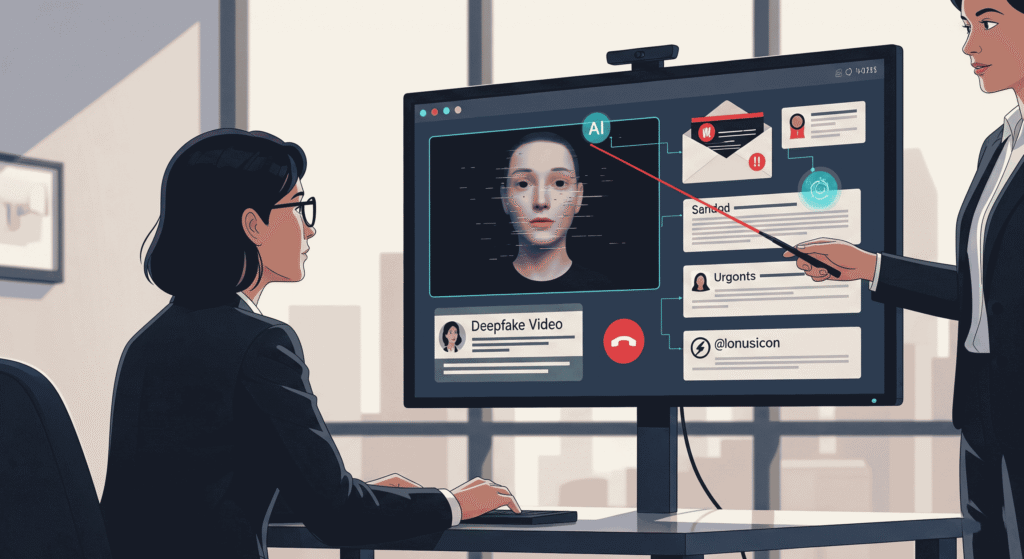

The National Security Agency (NSA), FBI, and CISA released guidance stating that threats from synthetic media, such as deepfakes, have exponentially increased—presenting a growing challenge for users of modern technology. These aren’t theoretical anymore—they’re targeting your business today.

The 5 Most Dangerous AI Security Risks: Insider Threats, Deepfakes, and More

- Shadow AI Insider Threats: One in five organizations surveyed said they’d experienced a cyberattack because of security issues with “shadow AI,” meaning employees’ use of unauthorized AI tools that open unmonitored access points for malicious actors

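- Deepfake Executive Impersonation: Attackers use synthetic video and audio to impersonate executives and request fraudulent transfers or sensitive data. According to the NSA, FBI, and CISA, synthetic media presents a growing challenge not only for businesses but also for National Security Systems, the Department of Defense, the Defense Industrial Base, and national critical infrastructure owners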

- AI-Enhanced Social Engineering: Malicious actors use AI to study employees’ digital footprints and behavioral patterns, then craft hyper-personalized phishing attacks

- Generative AI Data Poisoning: Attackers tamper with training data or models so that AI systems learn attacker-chosen behavior. NIST’s Generative Artificial Intelligence Profile (NIST AI 600-1) helps organizations identify risks unique to generative AI, including model manipulation and data contamination

- AI-Native Malware Evolution: Self-adapting malicious code that learns from security responses and changes its attack strategy faster than traditional detection systems can keep up

How AI Security Risks, Insider Threats, and Deepfakes Impact Your Business

These aren’t abstract cyber threats happening to “other companies.” When a deepfake of one of your executives surfaces around an earnings call, your stock price can plummet within hours. When shadow AI creates unauthorized access points, malicious insiders or outside attackers can exfiltrate terabytes of data before your security team even knows there’s a problem.

IBM’s findings show that ungoverned AI systems are more likely to be breached, and more costly when they are. The financial impact extends beyond immediate losses. Recovery can take longer because AI-enhanced attacks may leave fewer forensic traces and often compromise multiple systems simultaneously.

The $670,000 shadow AI premium that IBM reported likely reflects the complexity of investigating AI-enhanced breaches and the extended remediation required to secure compromised AI systems.

Protecting Against AI Security Risks, Insider Threats, and Deepfakes: An Action Plan

- Implement Shadow AI Detection: Deploy monitoring systems to identify unauthorized AI tool usage across your organization. NIST’s AI Risk Management Framework and its Generative AI Profile suggest actions for managing generative AI risk in line with organizational goals.

- Establish Deepfake Verification Protocols: Following the NSA, FBI, and CISA guidance on deepfake threats, create secondary confirmation channels for high-stakes decisions, financial transfers, and sensitive communications involving executives.

- Deploy AI-Aware Security Training: Train employees to recognize AI-generated phishing attempts, synthetic media manipulation, and suspicious behavior patterns that indicate insider threats leveraging AI tools.

- Strengthen AI System Governance: Because ungoverned AI systems are more likely to be breached, and more costly when they are, implement proper oversight and access controls for all AI deployments.

- Update Incident Response Plans: Traditional playbooks weren’t written for AI security risks. Build procedures specifically for AI-enhanced attacks, including isolation protocols for adaptive malware and response steps for deepfake incidents.
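One way to apply deepfake verification protocols is to write them down as an explicit approval rule. That way, no one has to judge a convincing voice or face under pressure. The Python sketch below is hypothetical. The action names, the $10,000 threshold, and the trusted-channel list are illustrative assumptions, not values from NSA, FBI, or CISA guidance. It treats every channel except in-person as spoofable and holds any high-stakes request until someone confirms it out of band.

```python
from dataclasses import dataclass

# Hypothetical policy values for illustration only; set these to match your own
# finance and security policies.
HIGH_RISK_ACTIONS = {"wire_transfer", "payroll_change", "credential_reset", "data_export"}
AMOUNT_THRESHOLD_USD = 10_000
# Default-deny: any channel not listed here is treated as spoofable by a deepfake.
TRUSTED_CHANNELS = {"in_person"}


@dataclass(frozen=True)
class Request:
    requester: str          # claimed identity, e.g. "CEO"
    channel: str            # how the request arrived, e.g. "video_call", "email"
    action: str             # what is being asked for
    amount_usd: float = 0.0


def needs_out_of_band_confirmation(req: Request) -> bool:
    """True if the request is high-stakes and arrived over a channel a deepfake could imitate."""
    high_stakes = req.action in HIGH_RISK_ACTIONS or req.amount_usd >= AMOUNT_THRESHOLD_USD
    return high_stakes and req.channel not in TRUSTED_CHANNELS


def approve(req: Request, confirmed_out_of_band: bool) -> bool:
    """Approve low-stakes requests; approve high-stakes ones only after independent confirmation.

    confirmed_out_of_band should be True only after staff contacted the requester
    through a pre-registered channel (for example, a callback to a number from the
    internal directory), never through contact details supplied in the request itself.
    """
    if needs_out_of_band_confirmation(req):
        return confirmed_out_of_band
    return True


if __name__ == "__main__":
    urgent = Request("CEO", "video_call", "wire_transfer", 250_000)
    print(approve(urgent, confirmed_out_of_band=False))  # False: hold until verified
    print(approve(urgent, confirmed_out_of_band=True))   # True
```

Any channel not explicitly trusted defaults to “spoofable.” As a result, a channel nobody listed (SMS, a new chat app) is covered automatically instead of slipping through.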

Frequently Asked Questions: AI Security Risks, Insider Threats, and Deepfakes

How do AI security risks, insider threats, and deepfakes threaten businesses?

The NSA, FBI, and CISA report that threats from deepfakes have increased exponentially. Deepfakes enable impersonation attacks, fraudulent communications, and sophisticated social engineering campaigns targeting employees and customers.

What is shadow AI and why does it increase breach costs?

Shadow AI refers to employees’ use of unauthorized AI tools. IBM found that organizations with shadow AI security issues suffered breaches costing $670,000 more on average than firms with little or no shadow AI. Unmonitored access points and ungoverned AI systems are likely contributors.
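Shadow AI detection often starts with the web proxy or DNS logs an organization already collects. The sketch below assumes a simple log format of `timestamp user host` per line. The approved domain `ai-gateway.example.com` is hypothetical, and the list of public AI services is illustrative and far from exhaustive. The sketch counts requests per user to AI services that are not on the approved list.

```python
from collections import Counter
from typing import Iterable

# Illustrative, non-exhaustive list of public generative AI services.
KNOWN_AI_DOMAINS = {"chatgpt.com", "claude.ai", "gemini.google.com"}
# Hypothetical: AI tools your organization has reviewed and sanctioned.
APPROVED_AI_DOMAINS = {"ai-gateway.example.com"}


def matches(host: str, domain: str) -> bool:
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def find_shadow_ai(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, host) to known AI services that are not approved.

    Assumes each log line is 'timestamp user host', separated by whitespace.
    """
    hits: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        _, user, host = parts[:3]
        host = host.lower()
        if any(matches(host, d) for d in APPROVED_AI_DOMAINS):
            continue  # sanctioned tool, not shadow AI
        if any(matches(host, d) for d in KNOWN_AI_DOMAINS):
            hits[(user, host)] += 1
    return hits


if __name__ == "__main__":
    sample_log = [
        "2025-01-10T09:14:02Z alice chatgpt.com",
        "2025-01-10T09:15:40Z alice chatgpt.com",
        "2025-01-10T09:20:11Z bob ai-gateway.example.com",
        "2025-01-10T09:31:57Z carol claude.ai",
    ]
    for (user, host), count in find_shadow_ai(sample_log).most_common():
        print(f"{user} -> {host}: {count} request(s)")
```

The sketch checks for the exact domain or one of its subdomains instead of a plain substring. This avoids false hits on look-alike hosts such as `notclaude.ai`.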

What makes AI security risks, insider threats, and deepfakes different from traditional cyber threats?

NIST’s Generative AI Profile describes risks that are novel to or exacerbated by generative AI. In practice, AI-enhanced attacks can adapt to defenses, operate at machine speed, and produce synthetic content that is difficult to distinguish from legitimate communications.

How quickly should organizations respond to AI security threats?

Organizations need to detect and respond to AI security risks within minutes, not hours. AI-enhanced attacks can escalate rapidly, making automated response systems and pre-planned AI incident protocols essential for limiting damage.

Where can organizations find official guidance on AI security?

The NSA, FBI, and CISA have released official cybersecurity information sheets on deepfake threats, while NIST provides the AI Risk Management Framework for comprehensive guidance on managing AI-related security risks.

The reality is stark: AI security risks aren’t coming—they’re here. While global data breach costs have declined to $4.44 million on average, organizations facing AI-enhanced attacks pay significantly more. But with proper preparation based on official government guidance, you can transform these threats from business-ending disasters into manageable risks. The question isn’t whether AI will impact your security posture, but whether you’ll be ready when it does.


For more information on this subject, see:

IBM Security, Cost of a Data Breach Report 2025