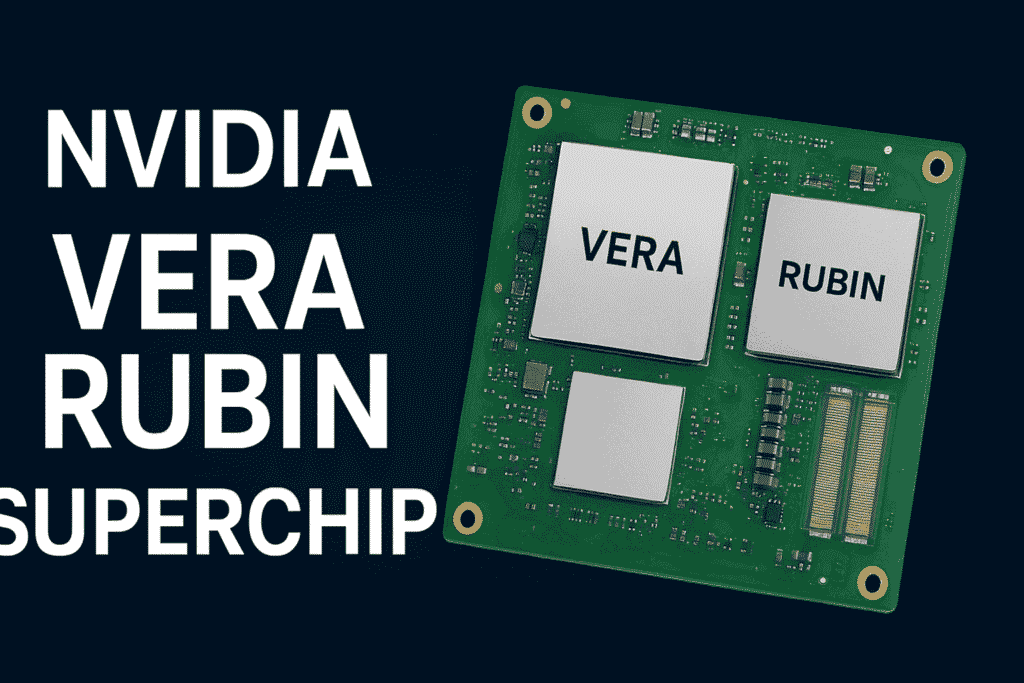

NVIDIA has once again raised the bar in artificial intelligence computing. At GTC 2025, the company unveiled the NVIDIA Vera Rubin Superchip, a platform that combines an 88-core ARM-based CPU (“Vera”) with two next-generation “Rubin” GPUs on a single board. This system-on-board design targets large-scale AI training and inference in data centers, with up to 100 PFLOPS of FP4 AI compute per Superchip.

What Is the NVIDIA Vera Rubin Superchip?

The Vera Rubin Superchip is NVIDIA’s next step after the Blackwell architecture, designed for AI training, inference, and HPC workloads. Instead of linking a discrete CPU and GPUs over PCIe, as conventional servers do, it connects them directly through NVLink-C2C (chip-to-chip) interconnects that provide 1.8 TB/s of CPU-GPU bandwidth.
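To put the 1.8 TB/s figure in perspective, the sketch below estimates how long it would take to move a hypothetical 90 GB working set between CPU and GPU memory. The PCIe Gen5 x16 comparison point of about 128 GB/s (roughly 64 GB/s per direction, combined) is an assumption, as is treating both numbers as aggregate bidirectional bandwidth. The model is idealized: it ignores latency, protocol overhead, and contention, so real transfers will be slower.

```python
# Idealized CPU<->GPU transfer-time estimate: size / bandwidth.
# Ignores latency, protocol overhead, and contention.

NVLINK_C2C_GBPS = 1800.0  # 1.8 TB/s NVLink-C2C figure quoted for Vera Rubin
PCIE5_X16_GBPS = 128.0    # assumption: PCIe Gen5 x16, ~64 GB/s per direction, combined


def transfer_ms(size_gb: float, bandwidth_gbps: float) -> float:
    """Ideal time in milliseconds to move size_gb gigabytes at bandwidth_gbps GB/s."""
    return size_gb / bandwidth_gbps * 1000.0


if __name__ == "__main__":
    working_set_gb = 90.0  # hypothetical data set shuttled between CPU and GPU memory
    links = {"NVLink-C2C (Vera Rubin)": NVLINK_C2C_GBPS, "PCIe Gen5 x16": PCIE5_X16_GBPS}
    for name, bw in links.items():
        print(f"{name}: {transfer_ms(working_set_gb, bw):.1f} ms")
    print(f"Bandwidth ratio: {NVLINK_C2C_GBPS / PCIE5_X16_GBPS:.1f}x")
```

Under these assumptions the transfer takes about 50 ms over NVLink-C2C versus about 703 ms over PCIe, a ratio of roughly 14×. If only one direction is used, both times double, but the ratio stays the same.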

Each Superchip combines:

- 1× Vera CPU – 88 custom ARMv9 cores with 176 threads

- 2× Rubin GPUs – each with dual compute dies and 8 HBM4 memory stacks

- HBM4 memory – 288 GB per GPU for high-bandwidth data access

- SOCAMM modules – LPDDR memory for CPU operations

This integration makes the Vera Rubin platform an “AI system on a board,” capable of unprecedented compute density and efficiency.

Key Specifications

| Component | Details |
|---|---|
| CPU (Vera) | 88 ARMv9 cores, 176 threads |
| GPU (Rubin) | Dual-chiplet design, HBM4 memory |
| CPU-GPU Interconnect | NVLink-C2C, 1.8 TB/s |
| AI Compute | Up to 100 PFLOPS (FP4 precision) |
| Transistor Count | ~6 trillion transistors |
| Target Market | Data centers, cloud AI, enterprise AI |
| Production | Expected in late 2026 |

Performance and Capabilities

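The Vera Rubin Superchip is aimed squarely at generative AI and LLM workloads. Its headline figures rely on FP4 (4-bit floating point) precision: each value takes half the storage of FP8 and a quarter of FP16, so models need less memory and bandwidth, and the tensor cores can process more operations per second. The result is faster inference at lower power per operation, which matters when serving large models at scale.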
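The sketch below shows how block-scaled FP4 quantization works in principle. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit), whose magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4, and 6, and gives each block of values one shared scale, loosely modeled on formats such as NVFP4 and MXFP4. The block size, the tie-breaking rule, and the full-precision scale are simplifications for illustration, not NVIDIA's implementation; real hardware also stores the scale itself in a low-precision format.

```python
from typing import List, Tuple

# Magnitudes representable by FP4 E2M1 (1 sign bit, 2 exponent bits, 1 mantissa bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def nearest_fp4(x: float) -> float:
    """Round x to the nearest signed E2M1 value.

    Simplification: ties go to the smaller magnitude, because min() keeps
    the first minimum it finds in the ascending grid.
    """
    mag = min(E2M1_GRID, key=lambda g: abs(abs(x) - g))
    return -mag if x < 0 else mag


def quantize_block(block: List[float]) -> Tuple[float, List[float]]:
    """Return one shared scale plus an FP4 code for each element of the block."""
    amax = max(abs(v) for v in block)
    # Map the block's largest magnitude onto the largest FP4 value (6.0).
    scale = amax / E2M1_MAX if amax > 0 else 1.0
    return scale, [nearest_fp4(v / scale) for v in block]


def dequantize_block(scale: float, codes: List[float]) -> List[float]:
    """Reconstruct approximate values; error per element is at most one scale unit."""
    return [c * scale for c in codes]


def quantize(values: List[float], block_size: int = 16) -> List[Tuple[float, List[float]]]:
    """Quantize values in consecutive blocks (block_size=16 is an illustrative choice)."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -1.2, 0.05, 0.7, -0.25]
    for scale, codes in quantize(weights, block_size=4):
        restored = dequantize_block(scale, codes)
        print(f"scale={scale:.3f} codes={codes} restored={[round(r, 3) for r in restored]}")
```

Each block's largest value maps to ±6 and is restored exactly, while smaller values snap to the coarse FP4 grid, so the per-element error is bounded by one scale unit. Storage drops to 4 bits per value plus the shared scale, about 4.5 bits per value if, for example, an 8-bit scale is shared across 16 values.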

At rack scale, NVIDIA’s Vera Rubin NVL144 system combines 36 Superchips (72 Rubin GPU packages, or 144 GPU dies) and is rated at 3.6 exaFLOPS of FP4 AI performance.
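The rack-level number follows directly from the per-Superchip figure. The sketch below assumes an NVL144 rack holds 36 Superchips, with two Rubin packages per Superchip and two compute dies per package (the “144” counts dies); these counts are assumptions for illustration, and the result is peak FP4 throughput, not delivered performance.

```python
# Back-of-the-envelope rack arithmetic for Vera Rubin NVL144 (peak figures only).
SUPERCHIPS_PER_RACK = 36        # assumption: 36 Vera Rubin Superchips per NVL144 rack
GPUS_PER_SUPERCHIP = 2          # Rubin GPU packages per Superchip
DIES_PER_GPU = 2                # compute dies per Rubin package
PFLOPS_FP4_PER_SUPERCHIP = 100  # peak FP4 figure quoted per Superchip


def rack_summary(superchips: int = SUPERCHIPS_PER_RACK) -> dict:
    """Aggregate GPU counts and peak FP4 exaFLOPS for a rack of Superchips."""
    gpus = superchips * GPUS_PER_SUPERCHIP
    dies = gpus * DIES_PER_GPU
    exaflops = superchips * PFLOPS_FP4_PER_SUPERCHIP / 1000  # 1 EF = 1000 PF
    return {"gpu_packages": gpus, "gpu_dies": dies, "fp4_exaflops": exaflops}


if __name__ == "__main__":
    print(rack_summary())
    # → {'gpu_packages': 72, 'gpu_dies': 144, 'fp4_exaflops': 3.6}
```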

This advancement fits NVIDIA’s established position in AI infrastructure, where its hardware already powers workloads ranging from ChatGPT-like systems to autonomous driving and robotics.

Why the Name “Vera Rubin”?

NVIDIA named the chip after Vera Rubin, the pioneering astronomer whose measurements of galaxy rotation provided key evidence for dark matter and transformed our understanding of the universe. It’s a fitting tribute: the Superchip is meant to open new frontiers in AI, much as Rubin’s discoveries expanded our cosmic horizons.

Market Impact

The Vera Rubin Superchip is not just a technical marvel; it’s a strategic weapon.

- For cloud providers: Expect denser, more efficient AI clusters with lower total cost of ownership.

- For enterprises: Faster training times for large language models and data analytics.

- For NVIDIA: Strengthened dominance in the AI chip market, putting more distance between it and competitors like AMD and Intel.

As AI adoption skyrockets, this chip will likely become the heart of next-generation data centers worldwide.

NVIDIA Vera Rubin vs. Blackwell

| Feature | Vera Rubin Superchip | Grace Blackwell Superchip (GB200) |
|---|---|---|
| CPU | 88-core Vera CPU | 72-core Grace CPU |
| CPU-GPU Link | NVLink-C2C, 1.8 TB/s | NVLink-C2C, 900 GB/s |
| GPU Design | Dual Rubin GPUs | Dual Blackwell GPUs |
| Memory | HBM4 (next-gen) | HBM3e |
| AI Precision | FP4 & FP8 | FP4 & FP8 (FP4 introduced with Blackwell) |
| Peak AI Performance | 100 PFLOPS FP4 | 40 PFLOPS FP4 |
| Availability | 2026 | 2024–2025 |

FAQs

1. What is the NVIDIA Vera Rubin Superchip?

It’s NVIDIA’s next-generation AI computing platform combining an 88-core ARM CPU and two Rubin GPUs, capable of delivering up to 100 PFLOPS of FP4 AI performance.

2. When will the Vera Rubin Superchip be available?

NVIDIA targets production in late 2026, with large-scale deployments expected in 2027.

3. What makes it different from previous NVIDIA chips?

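CPU-GPU integration itself is not new: the Grace Hopper and Grace Blackwell Superchips already paired an NVIDIA CPU with GPUs over NVLink-C2C. Vera Rubin doubles that link to 1.8 TB/s, moves to next-gen HBM4 memory, and replaces the 72-core Grace CPU with the 88-core Vera CPU.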

4. What is FP4 precision, and why does it matter?

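FP4 (4-bit floating point) stores each value in 4 bits, a quarter of the storage of FP16/BF16, so models need less memory and bandwidth and the hardware can process more operations per second. The trade-off is reduced numerical precision, which is why FP4 is used mainly for large-scale inference, typically with per-block scaling factors to preserve accuracy.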
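A quick way to see why lower precision matters is to estimate the memory needed just to hold a model’s weights. The sketch below uses a hypothetical 70-billion-parameter model and counts weights only, ignoring block-scale overhead, the KV cache, and activations.

```python
# Weights-only memory footprint at different precisions (1 GB = 1e9 bytes).
BITS_PER_VALUE = {"FP16/BF16": 16, "FP8": 8, "FP4": 4}


def weight_gb(params_billion: float, bits: int) -> float:
    """GB needed for the weights alone: params * bits / 8 bytes.

    Ignores block-scale overhead, the KV cache, and activations.
    """
    return params_billion * bits / 8


if __name__ == "__main__":
    for name, bits in BITS_PER_VALUE.items():
        print(f"{name}: {weight_gb(70, bits):.1f} GB")  # hypothetical 70B-parameter model
```

That works out to 140 GB at FP16/BF16, 70 GB at FP8, and 35 GB at FP4, so FP4 lets a larger model, or more concurrent requests, fit in the same amount of HBM.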

5. Who will benefit from the Vera Rubin Superchip?

Major beneficiaries include cloud AI providers, data centers, AI research labs, and enterprises deploying large AI models.

6. How powerful is it compared to NVIDIA’s Blackwell?

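On paper, a Vera Rubin Superchip offers roughly 2.5× the peak FP4 compute of a GB200 Grace Blackwell Superchip (100 PFLOPS versus 40 PFLOPS), along with HBM4 memory and a CPU-GPU link with twice the bandwidth. Real-world gains will depend on the workload.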

Final Thoughts

The NVIDIA Vera Rubin Superchip represents the next frontier of AI computing: a tight coupling of CPU and GPU on a single, high-efficiency board. Its launch marks another milestone in NVIDIA’s relentless push to dominate the AI infrastructure landscape. With its immense processing power and integrated design, the Vera Rubin Superchip could become the backbone of the next generation of artificial intelligence.
